Anyone who promotes a site and plans to seriously develop a business should know what parsing is. It is so common that complete protection against it is impossible. Parsing is a method of quickly processing information, or more precisely, of extracting and analyzing the data placed on web pages. It is used to process large volumes of text, numbers, and images quickly.

More about parsing

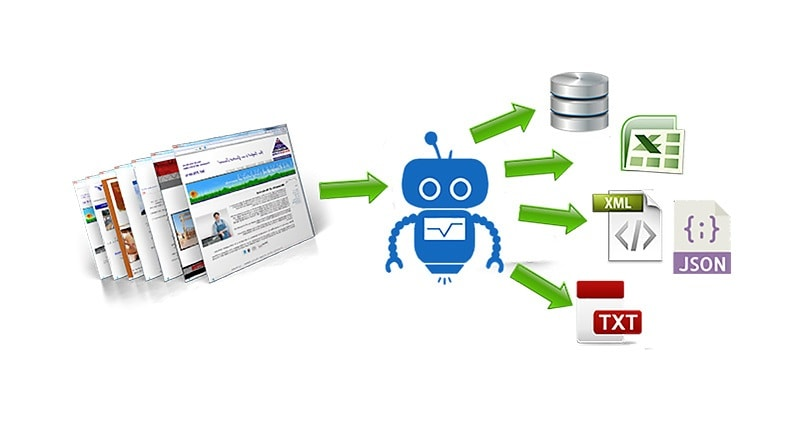

Simply put, parsing is the collection of information from other people’s sites. Parsers collect and analyze data from various sites using special programs. The essence of this process can be described as follows: the bot goes to the resource page → parses the HTML code into separate parts → extracts the necessary data → saves it in its database. Google’s robots are also a kind of parser, which is why it is so difficult to protect your site from scrapers: the same measures can also cut off access for search engines.
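The chain described above (page → HTML parts → needed data → database) can be sketched in a few lines. This is only an illustration using Python's standard library. The sample markup, the `name` and `price` class names, and the `products` table are all assumptions made for the example, and a real bot would download the page over HTTP instead of reading a string.

```python
import sqlite3
from html.parser import HTMLParser

# Hypothetical page markup; a real bot would fetch this from a site.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Kettle</span> <span class="price">25.50</span></li>
  <li><span class="name">Toaster</span> <span class="price">40.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from <span class="name"> / <span class="price">."""

    def __init__(self):
        super().__init__()
        self.field = None    # which field the current <span> holds, if any
        self.current = {}    # fields gathered for the product in progress
        self.products = []   # finished (name, price) tuples

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self.field = css_class

    def handle_data(self, data):
        # Text outside the spans we care about is ignored.
        if self.field:
            self.current[self.field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self.field = None
        elif tag == "li":
            # One <li> is one product; keep it only if both fields were found.
            if "name" in self.current and "price" in self.current:
                self.products.append(
                    (self.current["name"], float(self.current["price"]))
                )
            self.current = {}


def parse_and_store(html, conn):
    """Parse the HTML, separate the needed data, and save it to the database."""
    parser = ProductParser()
    parser.feed(html)
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", parser.products)
    conn.commit()
    return parser.products


conn = sqlite3.connect(":memory:")  # in-memory database, for the example only
products = parse_and_store(SAMPLE_HTML, conn)
```

Production parsers usually rely on dedicated HTML libraries and cope with messier markup, but the steps are the same.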

Parsing usually provokes a negative attitude, although it is not illegal. It collects information that is freely available; the program simply speeds up the process. Used correctly, parsing offers many benefits.

Why do you need parsing

Gathering information on the Internet is tedious, hard work, and systematizing it manually is almost impossible. Parsers, by contrast, can go through a huge number of resources in one day. Parsing is used for:

- Price policy analysis. To find the average price of a product, you need to look at hundreds of sites; doing that manually is simply unrealistic.

- Change control. If you use parsing regularly, you can easily track competitors’ price changes and keep up with the news.

- If the store has thousands of products, parsing will help to systematize the site, including finding blank pages or other errors.

- Filling cards in the online store. Manually describing thousands of products is difficult, plus it takes too much time. Parsing will help to do it much faster.

- Creating a customer base. This is especially true for spammers. A parser is sent “on a journey” through social networks, where it collects phone numbers and e-mail addresses.
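As a rough illustration of the first two uses in the list, price analysis and change control, here is a small sketch. It assumes the prices have already been parsed into dictionaries keyed by site. The site names, the prices, and the function names are made up for the example.

```python
from statistics import mean


def average_price(prices_by_site):
    """Average price of one product across all parsed sites."""
    return round(mean(prices_by_site.values()), 2)


def price_changes(previous, current):
    """Return {site: (old_price, new_price)} for sites whose price changed."""
    return {
        site: (previous[site], price)
        for site, price in current.items()
        if site in previous and previous[site] != price
    }


# Hypothetical results of two parsing runs for the same product.
yesterday = {"shop-a.example": 25.50, "shop-b.example": 27.00, "shop-c.example": 24.00}
today = {"shop-a.example": 25.50, "shop-b.example": 26.00, "shop-c.example": 24.50}

market_average = average_price(today)
changes = price_changes(yesterday, today)
```

Running the comparison after every parsing pass shows which competitors moved their prices and in which direction.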

Parsing is also useful for working with keywords. After making the necessary settings, you can quickly select the desired queries.

What interests parsers

The law of the Internet: content is stolen from everyone. Owners of web resources love to fill their sites with someone else’s content, even though non-unique content is harmful: positions in search sag, and sometimes the site gets banned. Therefore, to protect yourself, you need to know what gets stolen and how.

Bots are not the only tool for copy-pasting. Content is also successfully stolen “by hand”. This mainly applies to texts and images. Texts remain the basis for successful promotion. Google usually prefers the original source, even if the article has been copied in full.

How to protect yourself from parsing

Content needs to be protected from the very beginning, without waiting for the site to become known. This is especially true for young resources, because if trusted sites take their content, Google may treat those sites as the primary source. Methods of protection:

Prohibiting text copying. This is done with a small script, but it helps only when the text is copied manually, and a good specialist can easily circumvent the ban. It does not protect against automatic parsing.

Using reCAPTCHA. This method is also not very effective, because a captcha can be bypassed in many ways.

Paid services. For a fee, the service monitors the content. When a copy is found, a letter arrives in the mail. It is even possible to write a complaint to Google to delete the copied text. This method is quite popular in Europe and the United States.

Blocking bots by IP address. Effective if information is stolen in large quantities and on a regular basis. But this method has a significant disadvantage: search engine robots can end up blocked as well.
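One way to picture IP blocking without cutting off search engines is a per-address request limit combined with an allowlist. The sketch below only illustrates that idea. The `IpRateLimiter` class, the thresholds, and the addresses (taken from reserved documentation ranges) are assumptions, and it takes for granted that the crawler addresses have been verified separately.

```python
from collections import defaultdict, deque


class IpRateLimiter:
    """Allows at most max_requests per IP within a sliding time window."""

    def __init__(self, max_requests, window_seconds, allowlist=()):
        self.max_requests = max_requests
        self.window = window_seconds
        # Addresses that are never limited, e.g. verified search crawlers
        # (verifying them is outside the scope of this sketch).
        self.allowlist = set(allowlist)
        self.hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip, now):
        """Return True if the request at time `now` (in seconds) may pass."""
        if ip in self.allowlist:
            return True
        recent = self.hits[ip]
        # Forget requests that have left the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # too many requests: treat the address as a bot
        recent.append(now)
        return True


# Hypothetical settings: 3 requests per 60 seconds, one allowlisted crawler.
limiter = IpRateLimiter(max_requests=3, window_seconds=60, allowlist={"203.0.113.10"})
burst = [limiter.allow("198.51.100.7", t) for t in (0, 1, 2, 3)]
crawler = [limiter.allow("203.0.113.10", t) for t in (0, 1, 2, 3)]
```

Here the fourth request in the burst is rejected, while the allowlisted address is never limited. Scrapers that rotate addresses will still get past a limit like this, which is one reason the method works best against regular, large-scale copying.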

Adding a link. A script attaches a link to the original source to the copied text. It is advisable to place the link inside the text: then it is more likely that it will not be noticed and deleted.

It is difficult to fight copy-pasters, but it is possible. You can file a complaint with the search engine’s support service. There is also legal protection of content, for example the Digital Millennium Copyright Act (DMCA) in the United States.

What should you do if the texts were not deleted and the site has dropped in search? The most effective way is to try to regain lost ground. You can try to do it yourself, but the best option is to seek the help of professionals.